What is Data Poisoning?

Data poisoning is a sophisticated form of adversarial AI attack. Its goal is to compromise the confidentiality, integrity, or availability of an ML model by manipulating the data it learns from. Since ML models learn patterns and relationships from their training data, introducing 'poisoned' samples causes the model to learn incorrect or unintended associations.

Imagine teaching a student by providing them with a textbook where a small percentage of facts are subtly wrong. The student may learn and rely on those false facts, appearing competent most of the time, but failing predictably when those specific corrupted pieces of information are relevant. This is the essence of a data poisoning attack.

The manipulation can occur in three primary ways:

Categories and Types of Poisoning Attacks

Poisoning attacks are typically categorized by their objective, leading to a spectrum of malicious outcomes.

1. By Objective: Targeted vs. Non-Targeted

Non-Targeted (Availability): The goal is to degrade the model's overall performance and accuracy, rendering the system unreliable or unusable (in effect, a denial-of-service attack on the model). The result is a widespread, noticeable drop in accuracy across many inputs. Stealth is relatively low, because the performance drop itself is a clear symptom.

Targeted (Integrity/Backdoor): The goal is to manipulate the model's output only for specific, pre-defined inputs (those containing the "trigger") while leaving its performance largely unaffected on all other inputs. The model behaves normally until it encounters the trigger, at which point it produces the attacker's chosen output. Stealth is high, because the model appears clean during testing and normal operation.

2. Specific Attack Techniques

Specific techniques are often used to achieve the objectives of targeted or non-targeted poisoning:

Example (backdoor trigger): A facial recognition system is trained with a small set of images of a target person that all include a tiny, inconspicuous yellow square in the corner. When deployed, the model identifies any person as the target whenever the yellow square is present in the input image, and behaves normally otherwise.

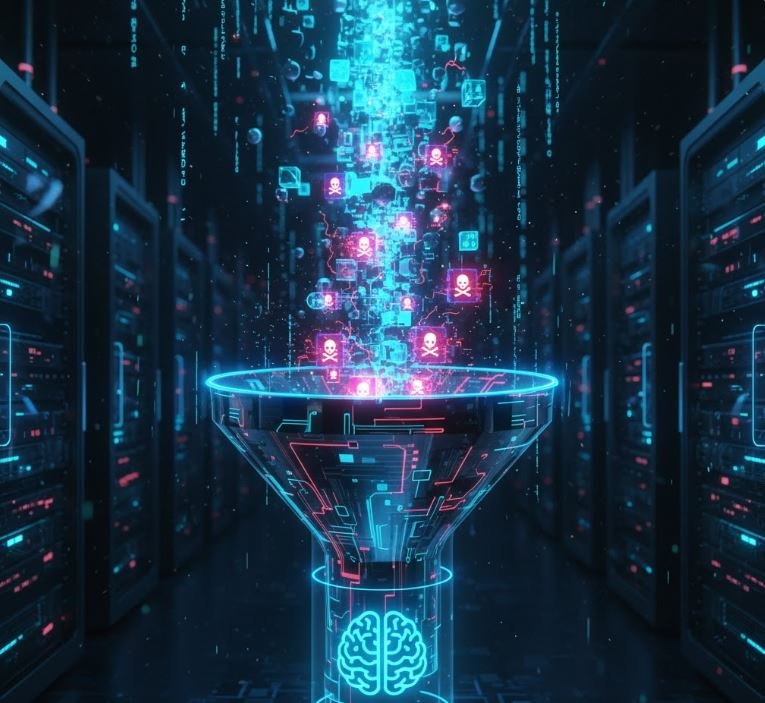

Attack Vectors: Where Does the Poison Enter?

The success of a poisoning attack depends on the attacker's ability to inject data into the ML system's data pipeline. This is becoming easier as the AI ecosystem grows more complex.

Supply Chain Attacks: This is a major vector for many models. AI/ML models increasingly rely on open-source, publicly available datasets (such as those hosted on Hugging Face, or data scraped from the public web) for training and fine-tuning. If an attacker can inject poisoned data into one of these trusted upstream sources, every model subsequently trained on that data may be compromised.

- The Nightshade project is a prominent example: artists can subtly alter their images to "poison" the training data of generative AI models that scrape the web, causing those models to learn undesirable and unpredictable behaviors (e.g., confusing a cow with a leather handbag).

Insider Threats: Employees, contractors, or other individuals with authorized access to the internal data pipeline, training infrastructure, or data collection systems pose a significant threat. They can intentionally or accidentally introduce poisoned data.

Compromised Data Systems: If the system responsible for collecting or storing training data is breached, an external attacker can gain the necessary access to manipulate the datasets before they are used for model training.

Consequences and Real-World Impact

The ramifications of a successful AI/ML poisoning attack extend far beyond simple technical inconvenience, potentially causing real-world damage and systemic risk.

Financial and Economic Damage: Poisoned models in financial trading or fraud detection systems could lead to bad investment decisions, unauthorized transactions, or significant financial loss.

Safety and Life-Critical Failures: In autonomous systems, such as self-driving cars, a targeted attack could cause the vehicle to misclassify a stop sign as a speed limit sign under specific environmental conditions, leading to catastrophic failure.

Bias and Discrimination: Attackers can systematically poison data to introduce or amplify biases against specific demographic groups in models used for loan approvals, hiring, or criminal justice, leading to unfair and discriminatory outcomes.

Erosion of Trust: A series of well-publicized poisoning incidents can severely undermine public and organizational trust in AI technology, slowing adoption even for beneficial applications.

Data Exfiltration (via backdoors): A backdoor injected into a model could be exploited to exfiltrate sensitive or proprietary training data by using the trigger to make the model reveal information it shouldn't.

Defenses and Mitigation Strategies

Defending against data poisoning requires a comprehensive, multi-layered approach that addresses security at every stage of the ML lifecycle, from data acquisition to model deployment.

1. Data Validation and Sanitization (Prevention)

The first line of defense is ensuring the integrity of the data before it enters the training pipeline.

Outlier/Anomaly Detection: Employ statistical methods, clustering algorithms (like DBSCAN), or even pre-trained ML models to automatically identify data points that deviate significantly from the expected distribution. These anomalies can be flagged as potential poison and removed.
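
As a minimal sketch of the statistical route, the snippet below flags values of a single numeric feature using a modified z-score based on the median absolute deviation (MAD). The function name, the 3.5 threshold, and the sample values are illustrative assumptions, not a prescribed configuration. Carefully crafted poison may not look like an outlier at all, so a check like this is only one layer.

```python
import statistics

def mad_outlier_flags(values, threshold=3.5):
    """Return one boolean per value: True if its modified z-score exceeds threshold."""
    median = statistics.median(values)
    abs_deviations = [abs(v - median) for v in values]
    mad = statistics.median(abs_deviations)
    if mad == 0:
        # Degenerate case: at least half the values equal the median.
        # Assumption for this sketch: flag anything that differs from it.
        return [v != median for v in values]
    # 0.6745 rescales the MAD so the score is comparable to a standard z-score
    # when the clean data is roughly normal.
    return [0.6745 * abs(v - median) / mad > threshold for v in values]

# Hypothetical feature column with one injected extreme value.
feature = [0.9, 1.1, 1.0, 0.95, 1.05, 1.02, 0.98, 9.0]
flags = mad_outlier_flags(feature)
suspects = [v for v, flagged in zip(feature, flags) if flagged]
print(suspects)  # [9.0]
```

Flagged points are candidates for review rather than proof of tampering; in practice the threshold would need tuning per feature.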

Data Provenance and Integrity Checks: Implement robust tracking of all data sources and modifications (data lineage). Use cryptographic checksums or hashing functions to verify that the data has not been altered during transmission or storage.
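
The checksum idea can be sketched with Python's standard `hashlib` module: record a SHA-256 digest for each data file when it is approved, then recompute and compare before training. The helper names and the temporary `train.csv` file are hypothetical, and the sketch assumes the manifest itself is stored somewhere an attacker cannot rewrite.

```python
import hashlib
import tempfile
from pathlib import Path

def sha256_of_file(path, chunk_size=65536):
    """Hash a file in chunks so large datasets need not fit in memory."""
    digest = hashlib.sha256()
    with open(path, "rb") as handle:
        for chunk in iter(lambda: handle.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()

def build_manifest(paths):
    """Map each file path to the digest recorded at approval time."""
    return {str(p): sha256_of_file(p) for p in paths}

def find_modified(manifest):
    """Return the paths whose current digest no longer matches the manifest."""
    return [p for p, expected in manifest.items() if sha256_of_file(p) != expected]

with tempfile.TemporaryDirectory() as tmp:
    data_file = Path(tmp) / "train.csv"
    data_file.write_text("text,label\ngood product,1\n")
    manifest = build_manifest([data_file])
    clean_check = find_modified(manifest)       # nothing changed yet
    data_file.write_text("text,label\ngood product,0\n")  # simulated label tampering
    tampered_check = find_modified(manifest)    # the file is now reported

print(len(clean_check), len(tampered_check))
```

A check like this only detects changes made after the manifest was recorded; it says nothing about data that was already poisoned at collection time.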

Principle of Least Privilege (POLP): Strictly limit who and what systems have permission to modify training data, thereby reducing the attack surface for insider threats or credential compromises.

2. Robust Training Techniques (Mitigation)

These methods aim to build models that are inherently resilient to corrupted data points.

Adversarial Training: While primarily designed against evasion attacks, this can be extended to include training the model on known poisoned or outlier examples to improve its ability to correctly classify or ignore them.

Robust Aggregation: For methods that use decentralized data (like Federated Learning), employ robust aggregation techniques (e.g., trimmed mean, median-of-means) that are less susceptible to the influence of a small fraction of highly corrupted data points.
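
To illustrate why robust aggregation helps, the sketch below compares a plain mean with a coordinate-wise trimmed mean and a coordinate-wise median over a handful of made-up client updates, one of which is malicious. The update values, the 20% trim fraction, and the function names are assumptions for illustration only.

```python
import statistics

def trimmed_mean(values, trim_fraction=0.2):
    """Mean after dropping the lowest and highest trim_fraction of values."""
    if not 0 <= trim_fraction < 0.5:
        raise ValueError("trim_fraction must be in [0, 0.5)")
    k = int(len(values) * trim_fraction)
    ordered = sorted(values)
    kept = ordered[k:len(ordered) - k]
    return sum(kept) / len(kept)

def aggregate(updates, reducer):
    """Apply reducer to each coordinate across all client updates."""
    return [reducer(list(coordinate)) for coordinate in zip(*updates)]

# Hypothetical two-parameter updates from five clients.
client_updates = [
    [0.10, -0.20],
    [0.12, -0.18],
    [0.09, -0.22],
    [0.11, -0.19],
    [50.0, 50.0],  # malicious client submitting an extreme update
]

plain = aggregate(client_updates, statistics.fmean)
robust_trim = aggregate(client_updates, trimmed_mean)
robust_median = aggregate(client_updates, statistics.median)

print("plain mean:  ", plain)
print("trimmed mean:", robust_trim)
print("median:      ", robust_median)
```

The plain mean is dragged far from the honest clients' values, while the two robust aggregates stay close to them. These estimators only help while the corrupted fraction stays below what they can trim or outvote.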

Robust Loss Functions: Use loss functions that are designed to minimize the impact of outliers on the overall training process.
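
One commonly used robust loss is the Huber loss, which is quadratic for small residuals and linear for large ones. The sketch below, with an assumed `delta` of 1.0 and made-up residuals, shows how it caps the gradient contributed by an extreme point, whereas squared loss lets that gradient grow with the residual.

```python
def squared_loss(residual):
    return 0.5 * residual ** 2

def squared_grad(residual):
    return residual

def huber_loss(residual, delta=1.0):
    """Quadratic inside [-delta, delta], linear outside."""
    abs_r = abs(residual)
    if abs_r <= delta:
        return 0.5 * residual ** 2
    return delta * (abs_r - 0.5 * delta)

def huber_grad(residual, delta=1.0):
    """Gradient w.r.t. the residual; its magnitude never exceeds delta."""
    if abs(residual) <= delta:
        return residual
    return delta if residual > 0 else -delta

# Hypothetical residuals: three ordinary points and one extreme (possibly poisoned) point.
residuals = [0.1, -0.3, 0.2, 8.0]
for r in residuals:
    print(r, squared_grad(r), huber_grad(r))
```

For the residual of 8.0, the squared-loss gradient is 8.0 while the Huber gradient is clipped to 1.0, so a single extreme sample pulls on the parameters far less.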

3. Continuous Monitoring and Auditing (Detection and Response)

Vigilance after deployment is crucial, as some targeted attacks are designed to be stealthy during training.

Model Performance Monitoring: Continuously monitor key performance metrics like accuracy, precision, and recall on a golden dataset (a small, clean, trusted set of evaluation data). Sudden, unexplained drops or unusual spikes are red flags.
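
A golden-dataset check can be as simple as the sketch below: compute accuracy on the trusted set and compare it against a recorded baseline. The toy models, the golden examples, and the 2% tolerance are hypothetical stand-ins; a real pipeline would track precision and recall as well and alert on unusual jumps in either direction.

```python
def accuracy(predict, examples):
    """Fraction of (input, label) pairs the model gets right."""
    correct = sum(1 for x, label in examples if predict(x) == label)
    return correct / len(examples)

def within_tolerance(current, baseline, max_drop=0.02):
    """True if accuracy has not fallen more than max_drop below the baseline."""
    return (baseline - current) <= max_drop

# Hypothetical golden set: label is 1 when the input is non-negative.
golden_set = [(-3, 0), (-1, 0), (0, 1), (1, 1), (2, 1), (4, 1)]

def baseline_model(x):
    return int(x >= 0)

def retrained_model(x):
    # Stand-in for a model whose decision boundary shifted after retraining.
    return int(x >= 2)

baseline_accuracy = accuracy(baseline_model, golden_set)
current_accuracy = accuracy(retrained_model, golden_set)

if not within_tolerance(current_accuracy, baseline_accuracy):
    print("ALERT: golden-set accuracy dropped from "
          f"{baseline_accuracy:.2f} to {current_accuracy:.2f}")
```

Because a backdoored model is designed to behave normally on clean inputs, a check like this mainly catches availability-style degradation and should be paired with trigger-focused testing.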

Activation Clustering: Analyze the internal state or activations of the model's hidden layers. Poisoned samples often cause unique, detectable activation patterns that can be clustered and isolated.
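
The following is a heavily simplified sketch of the clustering step, assuming hidden-layer activations for the samples of one class have already been extracted as small vectors. It splits them into two groups with a basic 2-means loop and flags the smaller group when it is suspiciously small. The activation values, the 35% size cutoff, and the function names are illustrative assumptions; published variants of this technique typically add dimensionality reduction and further cluster analysis.

```python
import math

def two_means(points, iterations=20):
    """Cluster points into two groups; returns a 0/1 label per point."""
    # Deterministic initialisation: the first point and the point farthest from it.
    first = points[0]
    farthest = max(points, key=lambda p: math.dist(p, first))
    centers = [list(first), list(farthest)]
    labels = [0] * len(points)
    for _ in range(iterations):
        labels = [
            0 if math.dist(p, centers[0]) <= math.dist(p, centers[1]) else 1
            for p in points
        ]
        for k in (0, 1):
            members = [p for p, label in zip(points, labels) if label == k]
            if members:
                centers[k] = [sum(col) / len(members) for col in zip(*members)]
    return labels

def flag_small_cluster(activations, max_fraction=0.35):
    """Return indices in the minority cluster if it is small enough to be suspicious."""
    labels = two_means(activations)
    size_one = sum(labels)
    size_zero = len(labels) - size_one
    minority = 1 if size_one < size_zero else 0
    minority_size = min(size_zero, size_one)
    if minority_size == 0 or minority_size / len(labels) > max_fraction:
        return []
    return [i for i, label in enumerate(labels) if label == minority]

# Made-up 2-D activations for one class: eight ordinary samples, two that sit far away.
clean = [[0.1, 0.2], [0.0, 0.1], [0.2, 0.1], [0.1, 0.0],
         [0.15, 0.15], [0.05, 0.1], [0.1, 0.1], [0.2, 0.2]]
suspicious = [[3.0, 3.1], [3.1, 2.9]]
flagged = flag_small_cluster(clean + suspicious)
print(flagged)  # [8, 9]
```

A small, well-separated cluster is a reason to inspect those samples by hand, not proof of poisoning; a legitimate sub-population can produce the same pattern.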

Behavioral Auditing (Red Teaming): Proactively test the deployed model using a range of adversarial examples and known triggers to check for unintended or malicious behavior, especially when integrating new data.
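
One way to audit for a suspected trigger, such as the corner patch in the facial recognition example earlier, is to stamp the candidate pattern onto clean inputs and measure how often the prediction flips to a single label. The sketch below uses tiny list-of-lists "images" and two toy models; `stamp_trigger`, `trigger_flip_rate`, and the label `7` are hypothetical names and values chosen for illustration.

```python
def stamp_trigger(image, value=1.0, size=2):
    """Return a copy of a 2-D image with a size x size patch in the bottom-right corner."""
    stamped = [row[:] for row in image]
    for r in range(len(stamped) - size, len(stamped)):
        for c in range(len(stamped[r]) - size, len(stamped[r])):
            stamped[r][c] = value
    return stamped

def trigger_flip_rate(predict, images, target_label):
    """Among images not already predicted as target_label, the fraction that
    switch to target_label once the candidate trigger is stamped on."""
    eligible = [img for img in images if predict(img) != target_label]
    if not eligible:
        return 0.0
    flipped = sum(1 for img in eligible if predict(stamp_trigger(img)) == target_label)
    return flipped / len(eligible)

def clean_model(image):
    # Toy stand-in: always predicts class 0.
    return 0

def backdoored_model(image):
    # Toy stand-in: predicts class 7 whenever the corner patch is fully set.
    corner = [image[r][c] for r in (-2, -1) for c in (-2, -1)]
    return 7 if all(v == 1.0 for v in corner) else 0

test_images = [
    [[0.0] * 4 for _ in range(4)],
    [[0.5] * 4 for _ in range(4)],
]

print(trigger_flip_rate(clean_model, test_images, target_label=7))       # 0.0
print(trigger_flip_rate(backdoored_model, test_images, target_label=7))  # 1.0
```

A flip rate near zero for a given pattern does not rule out a backdoor, since the real trigger may look nothing like the candidates tested.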

The Road Ahead

AI/ML poisoning attacks represent a fundamental security challenge, as the attack target shifts from the model's code to its knowledge base (the data). As AI systems become more ubiquitous, integrated, and autonomous, the incentive for sophisticated adversaries to compromise their integrity will only grow. The future of AI security depends on the industry moving beyond traditional cybersecurity practices and adopting a holistic, data-centric security paradigm. This includes developing new algorithms inherently resilient to data contamination, establishing transparent and verifiable data supply chains, and mandating stringent security protocols for all AI training pipelines.
